We’re learning new and surprising things about our brains all the time. Psychologists, behavioral economists, and other scientists have sophisticated new tricks to reveal what’s going on inside our skulls, and their findings are publicized more widely than ever before. We reveal what we think we’re thinking through polls and quizzes, we’re ‘tricked’ into revealing what we’re really thinking through rigged puzzles and tests (exposing our biases, misconceptions, etc), we have easy access to massive databases of recorded human thought, and most amazingly, neuroscientists can now peer inside our brains while we’re thinking. And some of what we’re learning shows us that we’ve been wrong about ourselves in some really important ways.

So: do changes in our understanding of how the brain works mean we have to change, even to overhaul, how our justice system works too?

Our justice system! WHAT?!? Why would we want to change something so equitable, so honorable, it’s defined by the word ‘justice’?

Seriously, though: to many, this question is almost too scary to ask.

Won’t we send the signal that we’re no longer tough on crime by even proposing such a project? Isn’t our justice system pretty good as it is? Occasional ‘bad apple’ cops, perjuring witnesses, and corrupt prosecutors aside, our justice system is founded on the wholesome principle of personal responsibility: if we do wrong, we pay the price. A central feature of our justice system, after all, is the right to a fair trial by a jury of our peers. And as a society, we’ve taken great pains to ensure that everyone charged with a crime can get a fair trial. Our standards of evidence are pretty high: there must be eyewitness testimony and plenty of it. Forensic evidence is carefully collected and thoroughly analyzed, from blood to fingerprints to DNA to the tiniest of hairs and fibers. Everyone is entitled to legal counsel, even if the taxpayers have to foot the bill. Children and the mentally disabled are, properly, not tried as if they have the same level of responsibility as fully capable adults. The convicted have a right to appeal if they present evidence that their trial was unfair or if they can demonstrate innocence. And so on.

Even granting all of these and setting them aside for now, a common objection to the current justice system in general is that the underlying concept of personal responsibility is a myth. We’re not responsible since everything we think and do is determined by laws of cause and effect. So, we have no free will, and if we have no free will, the whole concept of moral accountability, of responsibility for our actions, doesn’t make sense. The justice system ends up, then, having nothing to rightfully judge or punish. Let’s explore this for a moment.

What do we mean by personal responsibility? We mean that it’s we that did the thing, it’s we who understand that there are alternatives available to us, and it’s we that could, at least conceivably, have done otherwise. This is true even if our personalities, past experiences, and current circumstances make it unlikely we would have chosen otherwise. It’s reasonable to assign responsibility for actions, since it deters us from making bad choices, and motivates us to inculcate better habits in ourselves. Assigning responsibility does not mean we must ruthlessly punish all who do wrong; it means that we can make reasonable demands of one another as the circumstances warrant, be it punishment, recompense, an apology, or an acknowledgement of responsibility.

Who’s responsible for our actions, then? We all are, so long as we are capable of understanding what we should do and why (whether or not we understood at the time), and so long as we could have chosen to do otherwise. Unless immaturity, injury, or illness makes it impossible, or nearly impossible, to control our actions, all persons who are free to make their own choices can and should be held responsible for those choices. (I argue for this more fully in ‘But My Brain Made Me Do It!’)

By the way, that’s why I disagree with many who think that psychopaths shouldn’t be held responsible for their actions. Although it may be more difficult for the psychopath to do the right thing, to respect the rights of others, it’s still in their power to do so, so long as they are capable of reason. We don’t let other people off the hook just because they don’t feel like doing the right thing; and all people, psychopaths or not, often find the wrong thing to do irresistibly attractive, and the right thing difficult. As long as anyone demonstrates sufficient intelligence to understand that the rules apply to them, it doesn’t matter that they wish the rules didn’t, or feel like they shouldn’t. After all, psychopathy is characterized by lack of empathy, not intelligence.

The impartiality that underlies all morality, as in A owes a moral or legal duty to B, so B owes an equal moral or legal duty to A, is a simple and logical equation that any minimally intelligent adult person can grasp, psychopath or not.

So if the concept of personal responsibility, or moral accountability, is generally a good one, and our society is so committed to creating a fair justice system, what’s the problem with it? Here’s one of the main problems: many of our laws and practices are based on a poor understanding of how memory works, and an underestimation of how often it doesn’t work. For example, many of our law enforcement tactics are virtually guaranteed to result in unacceptably high rates of false convictions through their tendency to influence or convince suspects and eyewitnesses to remember details and events that never happened. Police interrogators can and do legally lie to their subjects in the attempt to ‘get them to talk’, under the assumption that false information has little to no effect on the person being interrogated other than to coax or scare the truth out of them. Until very recently, we didn’t understand how malleable memory really is, how easy it is in many circumstances to get others to form false memories by feeding them information. Courts all over the United States, even the Supreme Court, have upheld such deceptive techniques as lawful, yet we have mounting evidence to show these techniques have the opposite of their intended effect – unless, of course, the intended effect is any conviction for crime, not just the true one.

The fact that human memory is as undependable as it is seems counterintuitive, to say the least. It’s true that we can be forgetful, that we trip up on unimportant details such as the color of a thing, or the make of a car, or someone’s height. But surely, we can’t forget the really important things, like what the person who robbed or raped us looks like, or whether a crime happened at all, especially if we’ve committed it ourselves. Yet sometimes it’s the accumulation of small, relatively ‘unimportant’ misremembered details that leads us to ultimately convict the wrong people, and the evidence piling up also reveals that we do, in fact, misremember important things all the time. And people are losing their reputations, their liberty, their homes and money, even their lives, because of it.

Before we look at this evidence, let’s explore, briefly, how we think about memory, and what we are learning about it.

Not so long ago, working theories of human memory rather resembled descriptions of filing systems or of modern computers; many still think of it that way. We thought of the brain as something like a fairly efficient librarian or personal secretary neatly and efficiently storing important bits of information, such as memories of things that happened to us, images of the people and places we know or encounter in significant moments in our lives, and so on, in a systematic way that would facilitate later retrieval, and most importantly, retrieval of reliable information. ‘Unimportant’ memories, or the less significant details of memorable events, were thrown away by forgetting, so that brain power wouldn’t be wasted on useless information. Sometimes we’d find old memories stuffed away in the back of the file or pushed off to a corner somewhere, a little difficult to retrieve after much time had passed, but still accessible with some effort. But important memories were stored more or less intact and discrete from one another, so if we remembered something at all, we’d remember it fairly correctly, or at least, the most important details of it. After all, it wouldn’t make sense for our internal secretary to rip up the memory file into little pieces and stash it all over the place helter-skelter, or to cross out random bits or even doctor the files from time to time, would it? And we certainly couldn’t remember things as if they actually happened to us if they didn’t. It seems all wrong, that evolution (or an intelligent designer, if that’s your thing) would give us an inefficient, inaccurate, dishonest, or mischievous keeper of that most cherished, most self-defining component of ourselves, our life’s memories.

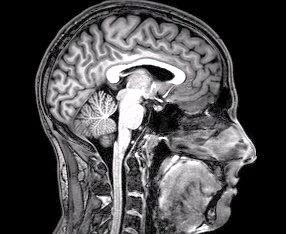

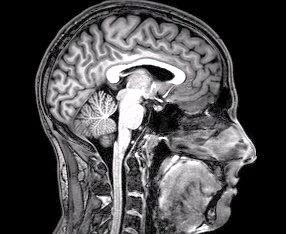

But now we know that memory works differently in many ways than we thought. How do we know this? For one, we’re now looking inside the brain as we think, and brain scans show that the process of recalling looks different in practice than we might have expected if some of our old theories were true. But more revealingly, we’re taking a closer look at our cognitive blunders. As with many other discoveries in science, we find out more and more about how something works, homing in on it so to speak, by examining instances where it doesn’t work as it should if our theories, or common sense, were correct.

As we’re finding out that memory doesn’t work as we thought, we’re also realizing how heavily our justice system relies on memory and on first-person accounts of what happened. We place a very high value on eyewitness testimony and confessions in pursuing criminal convictions, again, based on faulty old assumptions about how memory works, and how accurately people interpret the evidence of their senses. Yet as we’ll see, people have been falsely convicted because of these, even when other evidence available at the time contradicted these personal accounts of what happened. As our justice system places undue faith in memory and perception, despite their flaws, in what other ways is it built on wrong ideas about how the mind works?

Neuroscience, the quest to understand the brain and how it works, was founded on case studies of how the mind appeared to change when the brain was injured. Traditionally, the mind was thought of as a unified thing that inhabits or suffuses our brain somewhat as a ghost haunts an old house. If part of the house burns down, the ghost is the same: it can just move to another room. But careful observers, early scientists, noticed that an injury to a part of the brain affects the mind itself. When particular parts of the brain were injured, patients lost specific capabilities (to form new memories, for example, or to recognize faces, or to keep their temper). Sometimes, the personality itself would change, from friendly to unfriendly, say, or vice versa. Or in the case of split-brain patients, they would simultaneously like and dislike, believe and not believe, or be able and not able to do certain things, depending on how you asked, or sometimes just depending on what side of the body you addressed them from! Gradually, we came to understand that the mind is something that emerges from a physical brain, from the way the brain’s parts work together, and is entirely dependent on the brain itself for its qualities and for its very existence.

And as we discussed, it’s not only neuroscientists with their fMRIs that are revealing how the brain works. The criminal justice system has amassed mountains of evidence that shows us how often the human brain does its job and helps us ‘get the right guy’, and how often it fails. With advances in technology, such as DNA testing, more sophisticated firearms testing, expanded access to files and records, and many other newly available forensic tools, we’re discovering an alarming number of false convictions, not only of people currently imprisoned, but sadly, of those we’re too late to help. Most of these are the direct result of basic errors of our own faulty brains.

(I’ve more briefly discussed the issue of false convictions for crime in an earlier piece, stressing the importance of knowing the ways our criminal justice system fails, and one of the most effective ways society can keep itself informed.)

Two of the main culprits in false convictions are false memory and misperception, the one resulting from errors in recall, the other resulting from bias or the misinterpretation of sense evidence.

For example, let’s consider the case of a rape victim and the man she falsely accused, who went on to work together for reform in certain law enforcement practices. For years, Jennifer Thompson-Cannino was absolutely sure that Ronald Cotton had raped her, and her identification of him led to his conviction for the crime. After all, as she said, and as the court agreed, you couldn’t forget the face of someone who had done this to you, who had committed such an intimate crime inches away from your own eyes, could you? Eleven years later, the real rapist was identified when the original rape kit sample was re-tested to confirm a DNA match. Over time, Thompson-Cannino had convinced herself, honestly by all accounts, that Cotton’s face was the one she originally saw, and each time she looked at it, the more she ‘recognized’ it as that of her rapist, and the more strongly she associated this recognition with the rest of her memories of the crime. Fortunately, she’s one of those rare people who are able to fully admit such an egregious mistake, and she has joined with Cotton to call on police jurisdictions to change the way suspect lineup identifications are conducted. Thompson-Cannino and Cotton learned a hard lesson about the fallibility of memory, and how preconceived notions (in this case, that the police must be right) can withstand the rigors of the courtroom and still lead to very wrong conclusions.

Still, we can imagine that such a mistake must happen from time to time, as in Cotton’s case, when the supposed criminal looks quite a bit like the real one, and the array of circumstances that led to the whole mix-up is so unusually convoluted. But, surely, a lot of people couldn’t all remember the same crime, or series of crimes, wrongly, and describe it mostly the same way when questioned separately? Well, that happens too. The McMartin case of the early 1980s was just the first of a string of cases which resulted in hundreds of people being convicted for the rape and torture of small children, usually entirely based on the purported evidence of the ‘victims’’ own memories, or those of their family members. The ‘victims’, mostly young children, told detailed, lurid, horrific stories of events that most people would consider beyond the imaginative scope of innocent children. Over time, as those convicted of the crimes were pardoned, exonerated, or usually just had the charges dropped without apology (some still languish in prison, or are confined to house arrest, or are barred from being with children, including those of their own family), videos and transcripts of ‘expert’ interviews with these children showed the interviewers leading their interviewees on. Professional psychologists and interrogators were found to be influencing children to form their own false memories, even planting them whole-cloth, rather than drawing them out, as most people, including the professionals themselves, thought they were doing. Some of these children, now grown, still ‘remember’ these events to this day, even those who now know they never happened.

Okay, now we’re talking about children, and we all know children are impressionable. But usually it’s adults who give evidence in such important cases, and though they might be fuzzy on the details when it comes to what other people did, they know what they themselves did, right? Well, here again, no, not necessarily. In Norfolk, Virginia, eight people were convicted of crimes relating to the rape and murder of one woman, based on the first-person confessions and testimony of four military men. These men, who had tested sound enough of mind and body to join the Navy, were convinced by police interrogators, one by one, to ‘reveal’ that they and several others had, by a series of coincidences, formed an impromptu gang-rape party that managed to carry out a violent crime that lasted for hours, while leaving little destruction or evidence behind. Although these confessions didn’t match the evidence found at the crime scene, didn’t match one another, and in some cases were contradicted by alibis, all were found guilty on the common-sense and legal assumption that sane, capable people don’t falsely accuse themselves.

Yet as in the Norfolk case, the case history of our criminal justice system reveals that with the right combination of pressure, threats, assertion of authority, and personality type, just about anyone can be pushed to confess to committing even a terrible crime, and worse yet, become convinced that they did it (as one of the men did in the Norfolk case). The Central Park Five, as they are often called, were five teenage boys, aged 14 to 16, who were convicted of the rape, torture, and attempted murder of a woman in Central Park in New York City. The justifiably horrified public outrage at this crime, combined with frustration over a rash of other crimes throughout the city, put a lot of pressure on law enforcement to solve the crime in a hurry. These five boys had already been picked up by police officers on suspicion of committing other crimes that night, robbery and assault among them, and when the unconscious, severely beaten woman was found, the police hoped they had her attackers in custody already. After hours of intensive untaped interrogation, all five eventually confessed, implicating themselves and each other. They were convicted, despite the fact that the blood evidence matched none of them, and their confessions contradicted each other in important details.

False memories and false confessions are only two of the ways our fallible brains can lead us astray in the search for truth. Human psychology, so effective at so many things, is also short-sighted, self-serving, and wedded to satisfying and convenient narratives, to a fault. Law enforcement officials in all of these cases were convinced that their theories of effective interrogation were right, and that their perceptions of the suspects were right. The prosecutors were convinced that the police officers had delivered the right suspects for trial. The legislators that made the laws, and the courts that upheld them, were convinced they were acting in the best interest of justice. And as we’ve seen, all of these were wrong.

So was just about everyone involved in bringing Todd Willingham to court, and in condemning him to die for the murder of his own children by arson. By all accounts, Willingham didn’t act as people would expect a grieving father to act, especially one who had escaped the same burning house his children had died in. Yet it was one faulty theory after another, from pop psychology and preconceived notions about what ‘real’ grief looks like, to bad forensics, to a poor understanding of how an exceedingly immature and awkward man might only appear guilty of an otherwise unbelievable crime, that led to his conviction and execution by lethal injection.

But the problem of false conviction for crime is much, much larger than we might suppose from the cases we’ve considered here: these were all major crimes, and as such were subject to much more rigorous scrutiny than other cases. If wrongful convictions are known to happen so often in the case of major crimes, we can reasonably extrapolate a very high number of false arrests, undeserved fines, and especially, false plea deals, in which people innocent of the relatively minor crimes they’re accused of are rounded up, charged, and sentenced.

Plea bargaining presents a special problem: suspects are persuaded to plead guilty and accept a lesser sentence than the frighteningly harsh one they’re originally threatened with, and in jurisdiction after jurisdiction, we’re finding that huge numbers of innocent people are sent to prison every year through this method. All of this results from a blind zeal to promote justice, or at least, the appearance of justice in the interest of feeling secure, of more firmly establishing authority, or of fulfilling the emotional need to adhere to comforting social traditions.

So how do we need to change our attitude towards our criminal justice system, in the pursuit of actual justice? We need a proper spirit of epistemic humility, a greater concern for those who may have been wrongfully convicted, and a real love of justice itself (rather than the mere show of caring about justice that the ‘tough on crime’ stance too often consists of).

But we are still left with the practical task of protecting ourselves and one another. Positive action must be taken, or crime will run rampant, being unopposed. But that doesn’t mean we should hold on to old ideas and practices because we like them, because they are familiar and ‘time-tested’, and make us feel safe. This includes the death penalty, which shuts out all possibility that we can remedy our mistakes.

Here’s one general solution: approach criminal justice as we do science itself, where we accept conclusions based on the best evidence at the time, but founded on the idea that all conclusions are contingent, are revisable if better, compelling, well-tested evidence comes along. We need a justice system that assumes the fallibility of memory and perception, and builds in systematic corrections for them.

And we need a system that doesn’t just pay homage to this idea: we need to build one that allows for corrections, and not in such a way that it takes years, if it happens at all, to release someone from prison or clear someone’s name when the evidence calls for it. Many would say that the system already works this way: look at how many appeals are available to the convicted, and how many hundreds of people have already been exonerated of serious crimes. But in most circumstances, it doesn’t work that way. It takes anywhere from months to several years after actual innocence is established to actually release a wrongfully convicted person from prison. And many are not so lucky as to have their innocence proved: most cases don’t have DNA evidence available to test in the first place, or at least none that would definitively prove guilt or innocence. Even in the rare cases where such evidence is available, most of it is never re-tested, since the bar for re-evaluation of evidence is so high. Or, the evidence that was available is destroyed after the original conviction and is unavailable for re-examination. Or, legal jurisdictions are so determined that their authority remain unchallenged that they make it extremely difficult, if not impossible, for prosecutors and law enforcement officers to be held accountable in any way if they make a mistake, and bend over backwards to make sure such mistakes are never revealed. And so on.

In short: we don’t just need a justice system that brings in science to help out; we need a justice system whose laws and practices emulate the self-correcting discipline of science, which, in turn, is derived from the honest acknowledgement of the limitations of our own minds.

*Listen to the podcast version here or on iTunes

* Also published at Darrow, a forum for thoughts on the cultural and political debates of today

~~~~~~~~~~~~~~~~~~~~~~

Sources and Inspiration

‘About the Central Park Five’ [film by Ken Burns, David McMahon and Sarah Burns], PBS.org.

http://www.pbs.org/kenburns/centralparkfive/about-central-park-five/

Berlow, Alan. ‘What Happened in Norfolk.’ New York Times Magazine. August 19th, 2007.

http://www.nytimes.com/2007/08/19/magazine/19Norfolk-t.html

‘The Causes of Wrongful Convictions’. The Innocence Project.

http://www.innocenceproject.org/causes-wrongful-conviction

Celizic, Mike. ‘She Sent Him to Jail for Rape; Now They’re Friends’ Today News, Mar 3, 2009.

http://www.today.com/id/29613178/ns/today-today_news/t/she-sent-him-jail-rape-now-th…

Eagleman, David. ‘Morality and the Brain’, Philosophy Bites podcast, May 22 2011.

http://philosophybites.com/2011/05/david-eagleman-on-morality-and-the-brain.html

‘The Fallibility of Memory’, Skeptoid podcast #446. Dec 23, 2014.

http://skeptoid.com/episodes/4446

Fraser, Scott. ‘Why Eyewitnesses Get It Wrong’ TED talk. May 2012

http://www.ted.com/talks/scott_fraser_the_problem_with_eyewitness_testimony

Grann, David. ‘Trial by Fire: Did Texas Execute an Innocent Man?’ The New Yorker, Sep 7, 2009

http://www.newyorker.com/magazine/2009/09/07/trial-by-fire

Hughes, Virginia. ‘How Many People Are Wrongly Convicted? Researchers Do the Math’. National Geographic: Only Human, Apr 28, 2014.

http://phenomena.nationalgeographic.com/2014/04/28/how-many-people-are-wrongly-…

Jensen, Frances. ‘Why Teens Are Impulsive, Addiction-Prone And Should Protect Their Brains’. Fresh Air interview, Jan 28th, 2015.

http://www.npr.org/blogs/health/2015/01/28/381622350/why-teens-are-impulsive-...

Kean, Sam. ‘These Brains Changed Neuroscience Forever’. Interview on Inquiring Minds,

Lilienfeld, Scott O. and Hal Arkowitz. ‘What “Psychopath” Means’. Scientific American, Nov 28, 2007.

http://www.scientificamerican.com/article/what-psychopath-means/

Loftus, Elizabeth. ‘Creating False Memories.’ Scientific American, Sept 1, 1997.

http://faculty.washington.edu/eloftus/Articles/sciam.htm

and ‘The Fiction of Memory’ TED talk. June 2013.

http://www.ted.com/talks/elizabeth_loftus_the_fiction_of_memory?

Perillo, Jennifer T. and Saul M. Kassin. ‘The Lie, The Bluff, and False Confessions’. Law and Human Behavior (academic journal of the American Psychology-Law Society). Aug 24th, 2010.

https://www.how2ask.nl/wp-content/uploads/downloads/2011/10/Perillo-Kassin-The-Lie…

Possley, Maurice. ‘Fresh Doubts Over a Texas Execution’. The Washington Post, Aug 3, 2014.

http://www.washingtonpost.com/sf/national/2014/08/03/fresh-doubts-over-a-texas-execution/

Robertson, Campbell. ‘South Carolina Judge Vacates Conviction of George Stinney in 1944 Execution’, The New York Times, Dec. 17, 2014.

http://www.nytimes.com/2014/12/18/us/judge-vacates-convict…

Shaw, Julia. ‘False Memories Creating False Criminals’. Interview, Point of Inquiry podcast. March 2nd, 2015.

http://www.pointofinquiry.org/false_memories_creating_false_criminals_with_dr..

‘The Trial That Unleashed Hysteria Over Child Abuse.’ New York Times, Mar 9th, 2014.

and video ‘McMartin Preschool: Anatomy of a Panic | Retro Report’
